Cloudflare’s Second Outage in Two Weeks Proves Why Global Configs Are Infrastructure’s Silent Killer

Two weeks after taking down half the internet, Cloudflare did it again. Last Friday, another global outage lasted 25 minutes, impacting 28% of Cloudflare’s HTTP traffic. The cause? A seemingly innocent configuration change that propagated globally before anyone could stop it.

This wasn’t a sophisticated attack or exotic hardware failure. It was a killswitch toggle that went wrong.

The Core Insight

Here’s what happened: Cloudflare was rolling out a fix for a React security vulnerability. The fix caused an error in an internal testing tool. An engineer disabled the testing tool with a global killswitch. That killswitch unexpectedly triggered a bug causing HTTP 500 errors across Cloudflare’s entire network.

The previous outage in November? Also a global configuration change: a database permissions update caused a generated configuration file to double in size, and that oversized file then propagated to every machine on the network.

After November’s incident, Cloudflare’s postmortem included this action item: “Hardening ingestion of Cloudflare-generated configuration files in the same way we would for user-generated input.” In other words: treat internal configs with the same paranoia as external inputs.
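
What does "the same paranoia as external inputs" look like in practice? Here is a minimal sketch, not Cloudflare's implementation. The file format, the field names (`version`, `features`), and both limits are assumptions made up for illustration. The point is only that a generated file gets size, type, and bounds checks before anything downstream is allowed to use it.

```python
import json
from dataclasses import dataclass

# Hypothetical limits, chosen for illustration only.
MAX_CONFIG_BYTES = 64 * 1024
MAX_FEATURES = 200


class ConfigError(ValueError):
    """Raised when a generated config file fails validation."""


@dataclass(frozen=True)
class FeatureConfig:
    version: int
    features: tuple


def parse_feature_config(raw: bytes) -> FeatureConfig:
    """Parse an internally generated config as if it were untrusted input."""
    # Reject oversized files before spending any effort parsing them.
    if len(raw) > MAX_CONFIG_BYTES:
        raise ConfigError(f"config is {len(raw)} bytes, limit is {MAX_CONFIG_BYTES}")
    try:
        doc = json.loads(raw)
    except (UnicodeDecodeError, json.JSONDecodeError) as exc:
        raise ConfigError(f"config is not valid JSON: {exc}") from exc
    if not isinstance(doc, dict):
        raise ConfigError("top-level value must be an object")

    version = doc.get("version")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not isinstance(version, int) or isinstance(version, bool) or version < 1:
        raise ConfigError("version must be a positive integer")

    features = doc.get("features")
    if not isinstance(features, list) or not all(isinstance(f, str) for f in features):
        raise ConfigError("features must be a list of strings")
    if len(features) > MAX_FEATURES:
        raise ConfigError(f"{len(features)} features, limit is {MAX_FEATURES}")
    if len(set(features)) != len(features):
        raise ConfigError("features contains duplicate entries")

    return FeatureConfig(version=version, features=tuple(features))
```

Every failure surfaces as a single `ConfigError`, so the caller has one place to decide what happens next, for example keeping the previous config instead of crashing.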

That hardening had not shipped yet, and the gap came back to bite them.

This pattern isn’t unique to Cloudflare. Some of the largest outages in recent years share the same DNA:

- Meta’s 7-hour outage (2021): BGP configuration change propagated globally

- AWS outage (October 2025): Internal DNS system configuration

- Datadog’s $5M outage (2023): Ubuntu machines executing OS updates simultaneously worldwide

- Google Cloud (June 2025): A quota policy change replicated globally “within seconds,” sending the Service Control binaries that read it into a crash loop

Global configuration changes are infrastructure’s silent killer.

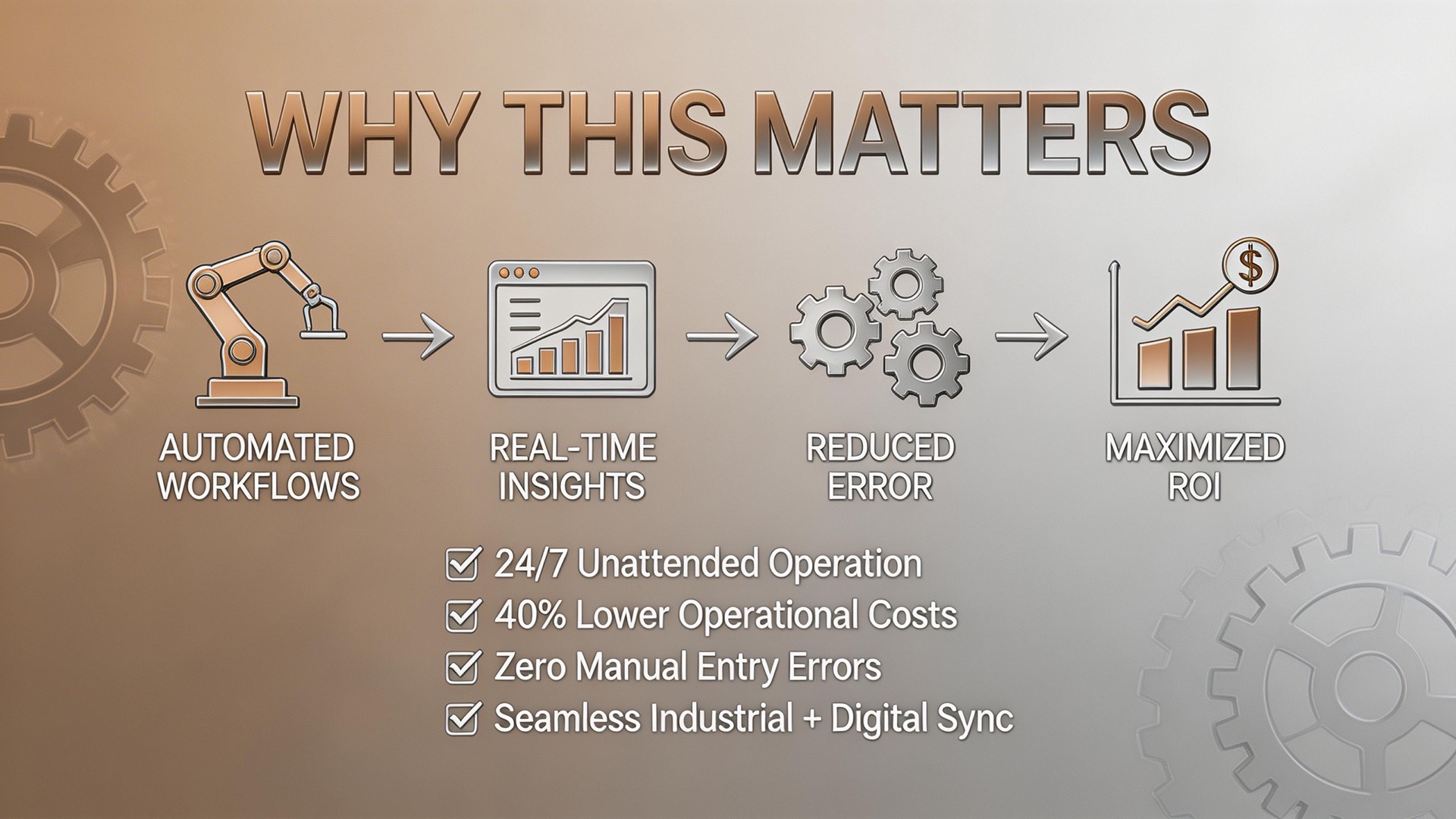

Why This Matters

Cloudflare’s value proposition rests on reliability. Customers pay for the confidence that they don’t need a backup CDN. Two major outages with similar root causes in two weeks undermine that foundation.

But this isn’t just a Cloudflare problem. Any system that propagates configuration globally—and that’s most modern infrastructure—carries the same risk.

CTO Dane Knecht’s reflection was brutally honest:

“These kinds of incidents, and how closely they are clustered together, are not acceptable for a network like ours.”

Cloudflare is now prioritizing:

- Enhanced Rollouts & Versioning: Configuration changes get the same staged rollout as code deployments

- Fail-Open Error Handling: Corrupt configs should degrade gracefully, not crash hard

- Break Glass Capabilities: Critical operations must work even when standard systems are compromised
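
To make the first item concrete, here is a rough sketch of a staged config rollout with a health gate between stages. It is an illustration under assumptions, not a description of Cloudflare's tooling: `staged_rollout`, `RolloutResult`, and the `apply`, `rollback`, and `healthy` callbacks are all hypothetical names, and a real system would also wait for a bake period before trusting a health check.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Sequence


@dataclass
class RolloutResult:
    completed: bool
    # Targets still running the new config when the rollout ended.
    applied_to: List[str] = field(default_factory=list)
    # Index of the stage whose health check failed, if any.
    halted_at_stage: Optional[int] = None


def staged_rollout(
    stages: Sequence[Sequence[str]],
    apply: Callable[[str], None],
    rollback: Callable[[str], None],
    healthy: Callable[[str], bool],
) -> RolloutResult:
    """Apply a change stage by stage, stopping at the first unhealthy stage."""
    applied: List[str] = []
    for index, stage in enumerate(stages):
        for target in stage:
            apply(target)
            applied.append(target)
        # Gate: every target in this stage must be healthy before moving on.
        if not all(healthy(target) for target in stage):
            # Undo in reverse order so the most recent change is reverted first.
            for target in reversed(applied):
                rollback(target)
            return RolloutResult(completed=False, halted_at_stage=index)
    return RolloutResult(completed=True, applied_to=applied)


if __name__ == "__main__":
    fleet = {}
    stages = [["canary-1"], ["region-a", "region-b"], ["everything-else"]]
    result = staged_rollout(
        stages,
        apply=lambda t: fleet.__setitem__(t, "new"),
        rollback=lambda t: fleet.__setitem__(t, "old"),
        healthy=lambda t: t != "region-b",  # pretend region-b starts erroring
    )
    print(result, fleet)
```

In the demo, the failure in the second stage means `everything-else` never receives the change. That is the whole trade: the rollout is slower, and the blast radius is limited to the stages already touched.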

Key Takeaways

Global config changes should never be instant: The speed that makes modern infrastructure powerful is also what makes failures catastrophic.

Staged rollouts for configs are hard but necessary: Rolling out configuration changes incrementally means building significant infrastructure that slows everything down. That friction is the feature.

The visibility problem is real: When configs work, their instant propagation is invisible. When they fail, 28% of your traffic returns errors at the same moment.

Internal tools need external paranoia: The killswitch that caused this outage was internal infrastructure, not customer-facing. It still brought down the network.

Two similar outages in two weeks is a credibility crisis: Customers will start pricing in backup CDN costs, and competitors will capitalize.
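
The fail-open idea that runs through these takeaways can be sketched as a holder that only swaps in a new config after it parses cleanly, and otherwise keeps serving the last known good one. This is a simplified illustration: `LastKnownGood`, `offer`, and `parse_json_object` are hypothetical names, and a production version would also need alerting and thread safety.

```python
import json
from typing import Callable, Generic, List, TypeVar

T = TypeVar("T")


class LastKnownGood(Generic[T]):
    """Holds the active config and refuses to replace it with a broken one."""

    def __init__(self, initial: T, parse: Callable[[bytes], T]) -> None:
        self._current = initial
        self._parse = parse
        self.rejections: List[str] = []  # record of rejected updates

    @property
    def current(self) -> T:
        return self._current

    def offer(self, raw: bytes) -> bool:
        """Try to adopt a new config. Returns False and keeps the old one on failure."""
        try:
            candidate = self._parse(raw)
        except Exception as exc:
            # Fail open: a bad update is recorded, not allowed to take the service down.
            self.rejections.append(f"{type(exc).__name__}: {exc}")
            return False
        self._current = candidate
        return True


def parse_json_object(raw: bytes) -> dict:
    """Example parser: accept only a JSON object."""
    doc = json.loads(raw)
    if not isinstance(doc, dict):
        raise ValueError("expected a JSON object")
    return doc


if __name__ == "__main__":
    holder = LastKnownGood({"testing_tool_enabled": True}, parse_json_object)
    holder.offer(b'{"testing_tool_enabled": false}')  # accepted
    holder.offer(b'{"testing_tool_enabled": ')        # rejected, old config stays
    print(holder.current, holder.rejections)
```

The design choice worth noting is that a rejected update is an event to alert on, not an exception to propagate into the request path.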

Looking Ahead

The engineering required to make configuration rollouts safe is substantial—and thankless. When it works, nothing happens. The reward is the absence of incidents, which is hard to demonstrate on a quarterly report.

But after this week, Cloudflare has no choice. Neither does anyone else running global infrastructure.

The irony is brutal: in trying to fix a security vulnerability quickly, Cloudflare triggered an outage. Speed and safety are in tension. The companies that figure out how to balance both will win the reliability game.

For the rest of us, there’s a simpler lesson: any system that can change everything at once can also break everything at once. Plan accordingly.

Based on analysis of “The Pulse: Cloudflare’s latest outage proves dangers of global configuration changes (again)” from Pragmatic Engineer

Tags: #DevOps #Infrastructure #Reliability #SRE #CloudComputing