Discord’s Global Age Verification: Teen Safety vs. Privacy in the Platform Era

As Discord rolls out mandatory age checks worldwide, the tension between protecting minors and preserving user privacy reaches a new inflection point

Discord announced this week that it’s rolling out age verification globally starting in March 2026. All users will be placed into a “teen-appropriate experience” by default, and access to age-restricted content, channels, and features will be limited to verified adults.

The timing is notable: this announcement comes just four months after Discord disclosed that around 70,000 users may have had their government ID photos exposed when hackers breached a third-party age verification vendor.

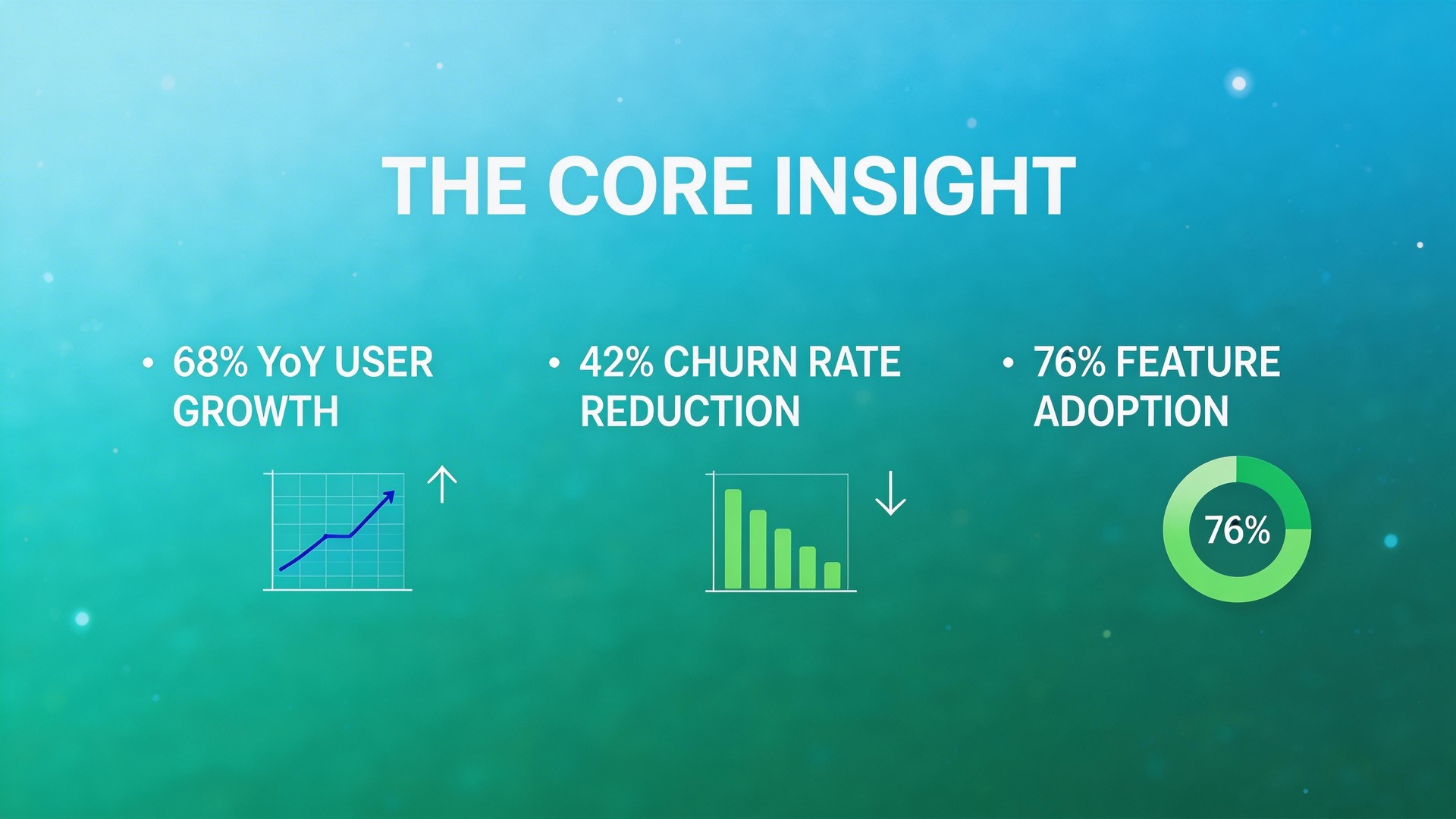

The Core Insight

Discord is walking a tightrope that every major platform must eventually cross: how do you protect minors without creating privacy risks for everyone?

Their approach involves two primary verification methods:

1. Facial age estimation via video selfies (which Discord claims never leave your device)

2. Government ID submission to vendor partners (deleted “quickly and, in most cases, immediately” after verification)

For most adult users, Discord says verification won’t be required at all. Its “age inference model” estimates whether a user is an adult from signals such as account tenure, device data, activity patterns, and aggregated community data. Private messages are explicitly excluded from this analysis.

Why This Matters

The Privacy Paradox:

Every age verification system creates a database of sensitive information: facial biometrics, government IDs, or behavioral profiles. These databases become high-value targets. Discord’s October 2025 breach proved this isn’t theoretical: real users’ government IDs were exposed.

Digital rights groups like the EFF have consistently argued that age verification requirements will “hurt more than they help.” The fundamental problem: you can’t verify age without collecting identity data, and you can’t collect identity data without creating attack surfaces.

The Regulatory Pressure:

Discord isn’t acting in a vacuum. The platform introduced age checks for UK and Australian users last year. Roblox now requires mandatory facial verification for chat access globally. YouTube has rolled out age-estimation technology for US teens. There’s a clear regulatory trend toward platforms assuming responsibility for age-appropriate content delivery.

The New Default Experience:

The changes are substantial:

– Messages from unknown users are routed to a separate inbox by default

– Friend requests from unknown users trigger warning prompts

– Speaking onstage in servers requires adult verification

– Age-restricted channels, servers, and app commands are locked behind verification

This fundamentally changes how Discord works for unverified users—intentionally creating friction around the features most likely to expose minors to risk.

Key Takeaways

Inference before verification: Discord’s approach prioritizes passive age inference over active verification. Most adults won’t need to submit IDs or selfies—the system will infer their age from account behavior.

Third-party vendor risk is real: The October breach showed the real-world consequences of age verification infrastructure failing. Users submitting IDs should understand that their data passes through external processors.

The platform landscape is converging: Discord, Roblox, YouTube, and others are all implementing similar systems. Age verification is becoming table stakes for major platforms serving mixed-age audiences.

Privacy-preserving alternatives are limited: On-device facial estimation is one approach, but it still requires biometric processing. There’s no perfect solution that verifies age without any privacy tradeoff.

Looking Ahead

Discord’s rollout will be one of the largest-scale age verification deployments to date. How it handles edge cases—users without IDs, appeal processes, false positives from the inference model—will set precedents for the industry.

The fundamental tension remains unresolved: platforms are being asked to know their users’ ages without knowing too much about their users. Each technical solution creates new vulnerabilities. Each privacy measure creates verification gaps.

For users, the practical advice is straightforward: understand what data you’re providing and to whom. For the industry, the challenge is deeper: building age verification infrastructure that protects minors without becoming the next breach headline.

The October incident showed what happens when age verification data gets exposed. March will show whether Discord has learned from that lesson—or whether we’re heading toward the same risks at global scale.

Based on analysis of “Discord to roll out age verification next month” (TechCrunch)